When generative AI arrived at San Francisco State University, some professors were quick to ask questions and explore the possibilities. Faculty members formed discussion panels with speakers from different departments, disciplines and California State University-funded initiatives to study how AI affects learning and teaching.

Professors at SFSU generally have the autonomy to decide how they approach generative AI in their classes, whether by integrating it into courses or by imposing limits and bans. Currently, there is no consensus on the use of AI on campus.

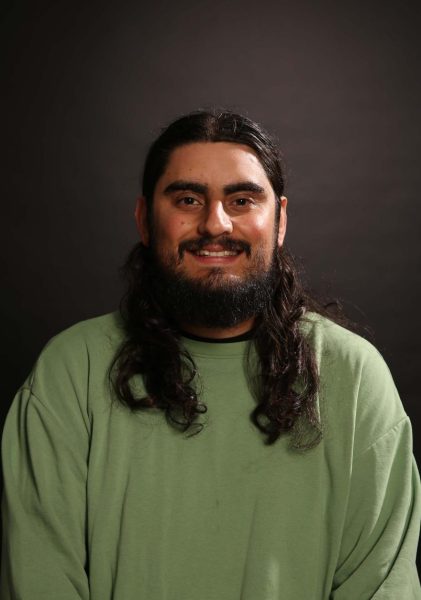

David Gill, who has taught in the Department of English Language & Literature at SFSU since 2006, said there’s no consensus regarding AI usage in the English department. Last year, he gave a presentation on why he thinks AI is beneficial.

“There were probably 35 or 40 English teachers in the room, and boy, they didn’t like what I was saying,” Gill said.

Gill teaches English 114, Writing the First Year: Finding Your Voice. He is also a science fiction author and fan.

Gill says he doesn’t necessarily want his students writing essays with AI. Instead, he builds AI into his assignments in a way that makes it difficult for students to simply have AI write their essays.

“I have them turn in an AI-generated essay on the assigned prompt as part of the homework,” Gill said. “We use that as a tool to see what’s good about this essay that the AI generated, what’s bad about it and occasionally, you’ll get some really good ones that the students can create with AI.”

What interests Gill about the AI-generated essays is that good writers get sound output from AI, while weaker writers get poor output. He said the weaker essays could be sharper: they have grammar issues and their quality drops, which shows that skill is still involved.

Gill sees language as a tool for both communication and discrimination. Command of proper grammar gives an advantage to those who can afford to learn it, creating an unequal playing field. He views ChatGPT as a kind of Robin Hood that levels the field for everyone.

“It can empower people who struggle with writing and elevate their ideas to legitimacy, where they can then take those ideas, and we can really have a battle, and everybody can be heard,” Gill said.

According to Gill, AI can help SFSU students develop strong writing skills, which he considers vital. He said a survey conducted a decade ago found that employers hiring SFSU graduates saw writing as the graduates’ weak point.

“The one result we got back over and over again was, ‘Your students can’t write, these graduates can’t write,’ and it threw the English department into a panic,” Gill said.

After that survey, the English department made changes such as dropping literature courses, so incoming students aren’t reading novels; they’re just writing essays, Gill said. But, he added, the new focus on writing hasn’t helped.

“We’re at the same place now that we were,” Gill said. “It’s been counterproductive, even. Here, we have an issue where I think ChatGPT can be effective and I think that’s what employers are going to want.”

Jennifer Trainor, an English professor, is leading a CSU-funded series on AI in higher education. She is partnering with SFSU’s Center for Equity and Excellence in Teaching and Learning (CEETL) to develop learning circles and teacher resources.

“We’ve held a couple of sessions on academic integrity in the age of AI and on teaching with AI or using AI, how it works,” Trainor said. “We focus specifically on ChatGPT for that one, just because that’s the one everyone’s heard of.”

Trainor teaches undergraduate writing courses and graduate courses on literacy, critical pedagogy and composition pedagogy. As AI use grows, she said, CEETL and Academic Technology are reaching out to other CSU campuses and universities across the country to gather feedback and learn how they are handling AI.

“There are tons of universities who already have AI resource pages for faculty in place that are more fleshed out than others,” Trainor said. “Those we are drawing on as we create learning and discussion opportunities for faculty.”

Trainor sees generative AI as a promising tool for writing and creativity but believes it will take time to reach its full potential. She looks forward to AI augmenting human abilities and enhancing teaching and engagement.

“If it’s a tool, it means it’s a tool, like a pencil or a computer; the writer is always the person in charge,” Trainor said. “They’re responsible for the knowledge they produce, the content they put out there and the tools they use.”

Zachary Weinstein, a computer science major with a minor in video game studies, used to think of AI as a sci-fi phenomenon: a machine that could perform complex, human-like tasks.

“We’re entering an era now where we’re starting to see some of that sci-fi become less fiction and more grounded science,” Weinstein said.

Weinstein explained that AI can be divided into deterministic and probabilistic categories. Deterministic AI produces the same output for a given input. Probabilistic AI, also known as machine learning, learns from large datasets and is less predictable.
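To make Weinstein’s distinction concrete, here is a toy Python sketch. It is not drawn from his remarks and is far simpler than ChatGPT: a fixed rule table stands in for deterministic AI, and a tiny word-pair (bigram) model trained on three made-up sentences stands in for probabilistic AI. The function names, rules and sample sentences are all hypothetical illustrations.

```python
import random
from collections import defaultdict

# Deterministic sketch: a fixed, hypothetical rule table.
# The same input always produces the same output.
RULES = {
    "hello": "Hi there!",
    "bye": "Goodbye!",
}


def deterministic_reply(message: str) -> str:
    """Return the canned reply for a message, or a fixed fallback."""
    return RULES.get(message.lower().strip(), "I don't understand.")


# Probabilistic sketch: learn which word follows which from example
# text, then sample from what was learned.
def train_bigrams(corpus: list[str]) -> dict[str, list[str]]:
    """Map each word to every word observed right after it.

    Duplicates are kept, so more frequent pairs are sampled more often.
    """
    model: dict[str, list[str]] = defaultdict(list)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            model[current].append(following)
    return dict(model)


def generate(model: dict[str, list[str]], start: str, max_words: int,
             rng: random.Random) -> str:
    """Build a phrase by repeatedly sampling a learned next word."""
    words = [start]
    for _ in range(max_words - 1):
        options = model.get(words[-1])
        if not options:  # no learned continuation: stop early
            break
        words.append(rng.choice(options))
    return " ".join(words)


if __name__ == "__main__":
    print(deterministic_reply("Hello"))  # always the same reply
    corpus = ["students write essays", "students read novels",
              "teachers read essays"]
    model = train_bigrams(corpus)
    rng = random.Random()  # unseeded, so output may change between runs
    for _ in range(3):
        print(generate(model, "students", 3, rng))
```

Run it a few times and the generated phrases can vary, while `deterministic_reply` never does. Systems like ChatGPT are also probabilistic but rely on neural networks trained on vastly more text; this sketch only illustrates the contrast in predictability.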

AI is not a new concept. Weinstein said both deterministic and probabilistic programs have existed for decades: deterministic AI has been around since the ’70s, and something like ChatGPT, or inferential AI, has been discussed as a concept for many years. He pointed this out to ease some of the tension around AI and to show that the technology isn’t that new; it’s just more public-facing now.

“There was a tool in the ’80s that had what we call natural language. You could give it a prompt and you would get a response in English back. That response would read like normal English and would be grammatically correct. It would sound like it was written by a human,” Weinstein said.

Some of Weinstein’s computer science professors allow ChatGPT in upper-division classes, where it is used only for repetitive boilerplate code. However, not all professors allow it.

“On its own, it’s useless. You have to know how to implement it with something else,” Weinstein said. “So you’ll see that with the upper division classes, but lower division classes, they’re very strict about using something like ChatGPT.”

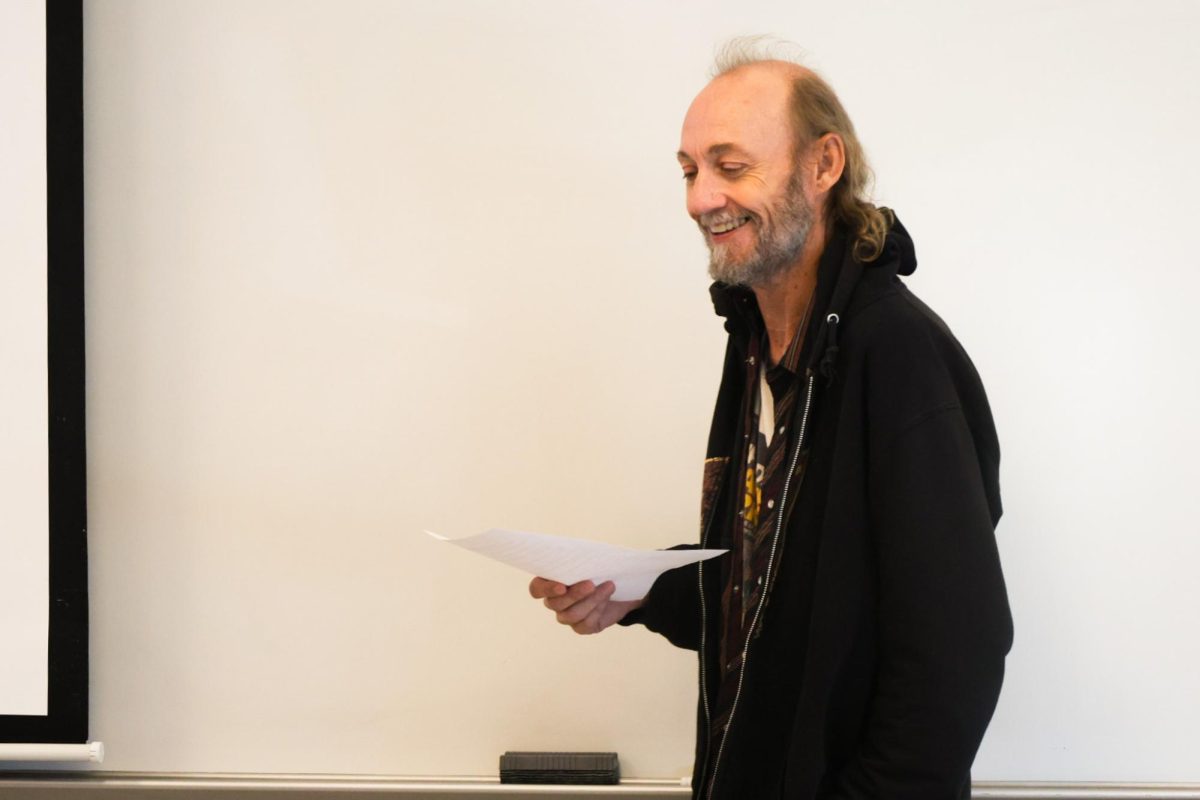

Anastasia Smirnova is an associate professor of linguistics in the English department at SFSU. Because she studies language, Smirnova is interested in computational tools that help humans produce language.

When ChatGPT was released last November, Smirnova was fascinated by its capabilities and held a seminar called ChatGPT and its Impact on Higher Education.

“I invited speakers from different departments and different disciplines who study natural language technologies and AI. I thought it would be important for us to get together and talk about the effect of this technology on higher education,” she said.

In February, some schools disallowed ChatGPT while others developed rules for its usage, according to Smirnova.

“It’s pretty much an ongoing discussion and my impression is that there are different positions and different opinions on this technology problem,” Smirnova said.

ELIZA, created in the ’60s, was an early AI program modeled after Rogerian psychotherapy; it mimicked dialogue by turning users’ statements back into questions. According to Smirnova, ChatGPT is superior to anything that came before it.

“Until recently, I would say that all these programs had limitations; with regard to ChatGPT, what I see in my research lab… is that the system outperforms previous AI systems on the set of natural language understanding benchmarks,” she said.

Smirnova thinks it may take time for professors to accept AI tools like ChatGPT, both because they will need to learn how the tools work and because of potential harm, since the tools can produce incorrect and harmful content.

“There is a possibility of incorrect and harmful content,” she said. “Until this is solved, I don’t think we can say, ‘Well sure, we can just go ahead and use it. Everyone can use it as they see fit.’ More research is needed.”

Smirnova believes that over the next five to 10 years, ChatGPT will make certain topics more accessible. As an example, she pointed to a text simplification project she is currently conducting with her lab team.

Text simplification involves making complex texts easier to read while preserving their meaning. It matters for many groups, but the lab focuses specifically on children’s access to information, an area where the ChatGPT model outperforms others.

“I think that it will have an impact on how we teach and how we assess in terms of impact on education. I think it will make certain topics more accessible,” Smirnova said.

Nasser Shahrasbi, an associate professor of information systems at the Lam Family College of Business, said the college brings in experts from different fields to discuss emerging technologies. It also educates students and faculty so they can keep up with technological progress.

The Lam Family College of Business has many programs to equip students with the latest technologies — for example, the Lam-Larson Initiative Fellow Program.

Shahrasbi also teaches IT courses and oversees programs focusing on emerging technologies, particularly generative AI and large language models for education and business. He hopes to organize an AI conference in the spring to introduce faculty to AI technologies like ChatGPT and Anthropic’s Claude. The aim is to enhance teaching and learning for students.

“Whether you want to learn it or not, it impacts life after graduation,” Shahrasbi said. “I think the impact is not only on education but on every single job, both white-collar and blue-collar jobs. If you know how to effectively use these tools, that’s going to be a good skill you can carry.”

According to Shahrasbi, AI can aid education, but relying on it too much may weaken students’ skills and lead employers to question what they can offer.

“There’s a tool or someone that can do it better for me,” Shahrasbi said. “If you use that during your studies, what do you have to offer the job market later? What do you have to offer the companies, even if you are an entrepreneur, is the same thing, what have you made to offer a job?”

Shahrasbi emphasized the importance of proactively learning to use AI because it is advancing rapidly and continuously; even if its growth halted momentarily, he said, AI would still have lasting impacts for years to come.

“It’s impossible to imagine someone surviving in the job market without knowing how to use current AI systems effectively,” Shahrasbi said. “AI is not just limited to ChatGPT, it has multiple domains, including image recognition and vision AI in healthcare. It will have a significant impact on future generations. Depriving students of AI education is illogical and won’t help them in the job market.”

Read Part II here: SFSU Grapples with Global Surge in AI, How SFSU Navigates the Ever-Evolving Tech Landscape